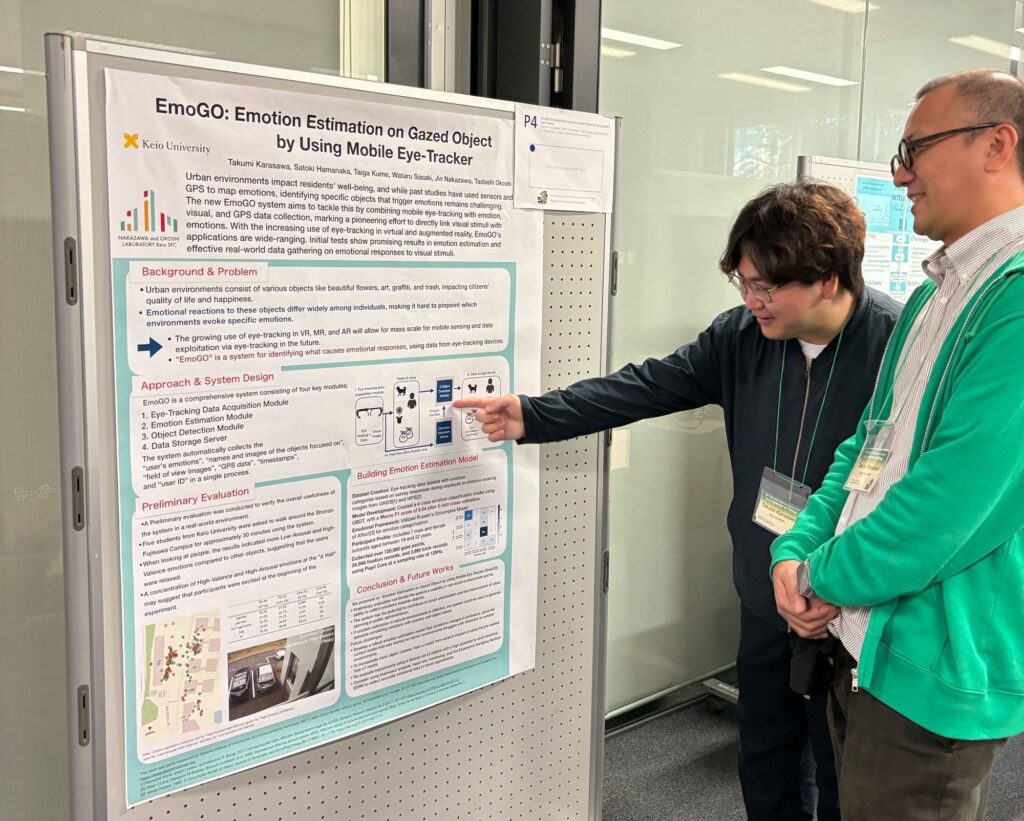

Karasawa, a first-year master's student, presented a poster on this research at IoT2023. The work also received the Best Poster Runners-up Award at IoT2023.

Abstract:

Urban environments consist of diverse elements influencing residents’ quality of life and emotional well-being. Prior research has attempted to map citizens’ emotions using sensors and GPS data, but it remains difficult to pinpoint environmental triggers that evoke specific emotions of them. To solve this problem, we introduce EmoGO, a novel system that integrates mobile eye-tracking with emotion, visual, and GPS data collection. To our knowledge, this approach is the first study to uniquely correlate specific visual stimuli with emotions. Given the rising prevalence of eye-tracking in VR, MR, and AR technologies, EmoGO’s potential applications are substantial. Our preliminary evaluation resulted in a promising result in emotion estimation on our original dataset, and also showed that the system can effectively collect real-world data on objects and associated emotions.

Karasawa, T., Hamanaka, S., Kume, T., Sasaki, W., Nakazawa, J., & Okoshi, T. (2023, November). EmoGO: Emotion Estimation on Gazed Object by Using Mobile Eye-Tracker. In Proceedings of the 13th International Conference on the Internet of Things (pp. 158-161).